During the Empirical Methods in Natural Language Processing conference, MIT researchers introduced a parser that mimics a child’s language-acquisition process. According to the presenters, the parser can learn and develop its own capabilities through observation.

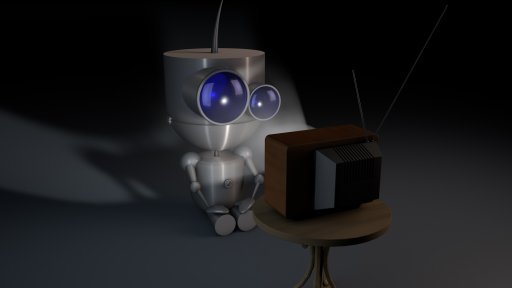

Such parsers are becoming increasingly important for web search, natural-language database querying, and voice-recognition systems such as Alexa and Siri. They are also expected to find use in home robotics, where they could make interaction between humans and personal robots more natural.

Passive Observers and Visual Learners

The parser learns the structure of a language by observing captioned videos, which lets it associate words with the objects and actions recorded in them. Given a new sentence, the parser can then draw on what it learned during training to predict the sentence’s meaning without needing a video.

However, if the parser has trouble understanding a particular word or phrase, such as an action or object in a sentence, it can refer to the video to clear things up. “By assuming that all of the sentences must follow the same rules, that they all come from the same language, and seeing many captioned videos, you can narrow down the meanings further,” says Boris Katz, a co-author of the paper and a principal research scientist and head of the InfoLab Group at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL).

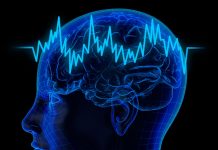

Traditional syntactic and semantic parsers are trained on sentences that humans have annotated to describe the structure and meaning of the words. The new parser instead learns more the way children do: children acquire language by observing their environment and by picking up words and, naturally, a language’s word order from the speech of the people around them.

“A child has access to redundant, complementary information from different modalities, including hearing parents and siblings talk about the world, as well as tactile information and visual information, [which help him or her] to understand the world,” says Andrei Barbu, a co-author of the paper and a researcher at CSAIL and the Center for Brains, Minds, and Machines (CBMM).

The paper’s other co-authors are Candace Ross, a graduate student in the Department of Electrical Engineering and Computer Science and CSAIL, and a researcher in CBMM; Yevgeni Berzak, a postdoc in the Computational Psycholinguistics Group in the Department of Brain and Cognitive Sciences; and CSAIL graduate student Battushig Myanganbayar.

The research was supported by the CBMM, the National Science Foundation, a Ford Foundation Graduate Research Fellowship, the Toyota Research Institute, and the MIT-IBM Brain-Inspired Multimedia Comprehension project.